GenRM: Generative Reward Models - A Unified Approach to RLHF and RLAIF

Generative Reward Models (GenRM) is a novel framework that combines RLHF and RLAIF to better align LLMs with human preferences, outperforming Bradley-Terry reward models by up to 45% on out-of-distribution tasks.

GenRM: GENERATIVE REWARD MODELS

Reinforcement Learning from Human Feedback (RLHF) has greatly improved the performance of modern Large Language Models (LLMs). The RLHF process is resource-intensive and technically challenging, generally requiring a large collection of human preference labels over model-generated outputs. Reinforcement Learning from AI Feedback (RLAIF) addresses this data collection challenge by leveraging synthetic preferences generated by an LLM. However, recent work has shown that synthetic preference labels may not align well with human preference judgments.

To address this, we propose a hybrid approach that unifies RLHF and RLAIF methodologies. We introduce GenRM, an iterative algorithm that trains an LLM on self-generated reasoning traces, yielding synthetic preference labels that match human preference judgments. Empirically, we show that zero-shot LLM-based judgments underperform Bradley-Terry reward models on in-distribution tasks (by 9-36%). In contrast, GenRM achieves in-distribution accuracy comparable to Bradley-Terry models while significantly outperforming them on out-of-distribution tasks (by 10-45%). Moreover, GenRM surpasses the performance of LLMs used as judges on both in-distribution (by 9-31%) and out-of-distribution tasks (by 2-6%).

Our results show that combining the strengths of RLHF and RLAIF offers a promising approach for improving the quality of synthetic preference labels.
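For readers unfamiliar with the Bradley-Terry baseline mentioned above, the following is a minimal sketch of the standard Bradley-Terry preference model, in which each answer receives a scalar reward and the preference probability is a logistic function of the reward difference. The function names are illustrative assumptions, not code from this work.

```python
import math


def bradley_terry_prob(reward_a: float, reward_b: float) -> float:
    """Probability that answer A is preferred over answer B under Bradley-Terry."""
    # Logistic function of the reward difference.
    return 1.0 / (1.0 + math.exp(-(reward_a - reward_b)))


def bradley_terry_nll(reward_chosen: float, reward_rejected: float) -> float:
    """Negative log-likelihood of a single human preference label."""
    return -math.log(bradley_terry_prob(reward_chosen, reward_rejected))
```

A classical reward model is trained to minimize this negative log-likelihood over human-labeled pairs. A generative reward model instead produces its preference as generated text.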

Introducing Generative Reward Models

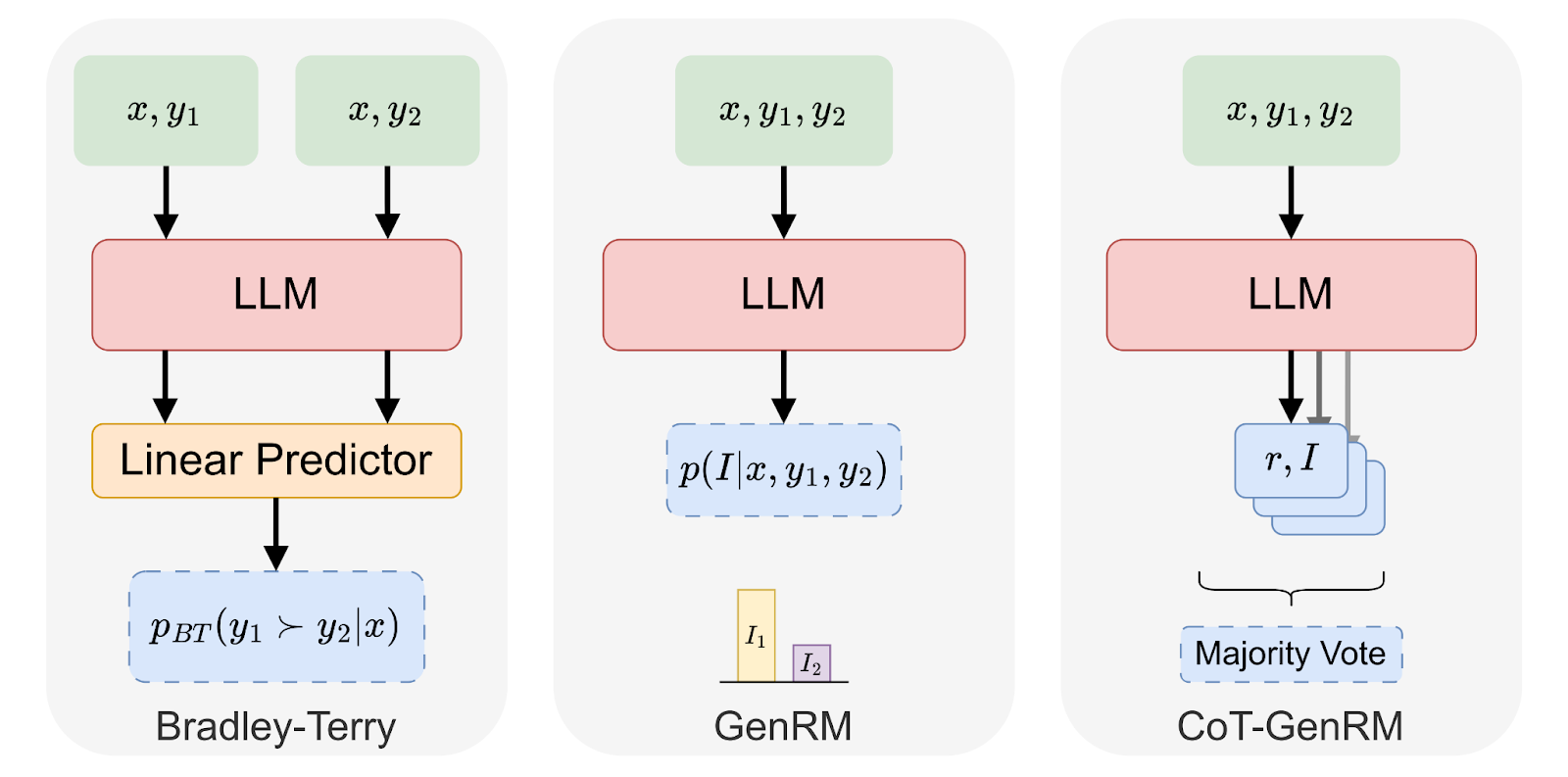

We're excited to share our research lab's latest work on improving LLM post-training through a novel approach called Chain-of-Thought Generative Reward Models (CoT-GenRM). This work combines the strengths of human feedback and AI-generated feedback, with a focus on reasoning-based decision-making, to create more robust and generalizable AI systems.

The Post-training Challenges of Performance & Alignment

As AI systems become more advanced, ensuring they align with human values and preferences becomes increasingly crucial. Two main approaches have emerged to tackle this challenge:

- Reinforcement Learning from Human Feedback (RLHF): This method uses human preferences to guide AI behavior but can be resource-intensive and limited in scale.

- Reinforcement Learning from AI Feedback (RLAIF): This approach uses AI-generated preferences, which can be more scalable but may not always align perfectly with human judgments.

Methodology: CoT-GenRM and STaR-DPO

Our research introduces Chain-of-Thought Generative Reward Models (CoT-GenRM) as a hybrid approach that combines the best of both worlds, with a crucial emphasis on reasoning. Here's how it works:

- We start with a large language model (LLM) as a base evaluator.

- We incorporate reasoning to improve the model's decision-making process, allowing it to provide justifications for its judgments.

- Using a dataset of human preferences, we identify correct and incorrect judgments made by the model, which are then used to fine-tune the LLM to better align with human preferences.
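The steps above can be sketched roughly as follows. This is a minimal illustration under stated assumptions: the `JudgeSample` container, the `"A"`/`"B"` verdict encoding, and the one-to-one pairing of correct with incorrect traces are all hypothetical choices made for the example, not the exact procedure used in this work.

```python
from dataclasses import dataclass


@dataclass
class JudgeSample:
    prompt: str      # judge prompt containing both candidate answers
    reasoning: str   # self-generated reasoning trace, ending in a verdict
    verdict: str     # "A" or "B", parsed from the trace (assumed encoding)


def split_by_human_label(samples, human_label):
    """Partition sampled traces by whether their verdict matches the human label."""
    correct = [s for s in samples if s.verdict == human_label]
    incorrect = [s for s in samples if s.verdict != human_label]
    return correct, incorrect


def build_preference_pairs(samples, human_label):
    """Pair each correct trace with an incorrect one for preference fine-tuning."""
    correct, incorrect = split_by_human_label(samples, human_label)
    # zip stops at the shorter list, so unmatched traces are dropped (assumption).
    return [
        {"prompt": c.prompt, "chosen": c.reasoning, "rejected": r.reasoning}
        for c, r in zip(correct, incorrect)
    ]
```

Each resulting record has the prompt/chosen/rejected shape that preference-optimization methods typically consume.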

STaR-DPO: A Key Innovation

A key innovation in our approach is the STaR-DPO method, which stands for Self-Taught Reasoner with Direct Preference Optimization. This method combines two powerful techniques:

- Self-Taught Reasoner (STaR): This iterative approach allows the model to improve its own reasoning capabilities by learning from its previous outputs.

- Direct Preference Optimization (DPO): This method directly optimizes the language model to align with preference data, simplifying the training process.
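To make the DPO component concrete, here is a minimal sketch of the standard per-example DPO loss, computed from sequence log-probabilities under the trained policy and a frozen reference model. The function name, argument names, and the default `beta=0.1` are assumptions for illustration. The training configuration used in this work is not specified here.

```python
import math


def dpo_loss(policy_chosen_logp, policy_rejected_logp,
             ref_chosen_logp, ref_rejected_logp, beta=0.1):
    """Standard DPO loss for one (chosen, rejected) pair of sequences."""
    # Log-ratio of policy to reference for each response.
    chosen_logratio = policy_chosen_logp - ref_chosen_logp
    rejected_logratio = policy_rejected_logp - ref_rejected_logp
    margin = beta * (chosen_logratio - rejected_logratio)
    # -log(sigmoid(margin)) written as a numerically stable softplus(-margin).
    x = -margin
    return max(x, 0.0) + math.log1p(math.exp(-abs(x)))
```

When the policy equals the reference model the margin is zero and the loss is log 2. The loss falls as the policy assigns relatively more probability to the chosen response.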

Comparison: STaR-DPO vs LLM-as-a-judge

User: Name two animal species that live in the ocean.

[The Start of Assistant A's Answer]
Dolphin and shark.
[The End of Assistant A's Answer]

[The Start of Assistant B's Answer]
Common ocean animals include sharks, whales, and dolphins.
[The End of Assistant B's Answer]

An example where LLM-as-a-judge fails to provide an accurate judgment, but STaR-DPO succeeds. Sentences where critical reasoning takes place are highlighted for emphasis.
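A pairwise comparison like the one above could be assembled and scored along these lines. The bracketed answer delimiters follow the example. The `[[A]]`/`[[B]]` verdict markers and both function names are assumptions for illustration, since the judge's output format is not shown here.

```python
import re


def format_judge_prompt(question: str, answer_a: str, answer_b: str) -> str:
    """Lay out a question and two candidate answers for a pairwise judge."""
    return (
        f"User: {question}\n"
        f"[The Start of Assistant A's Answer]\n{answer_a}\n"
        f"[The End of Assistant A's Answer]\n"
        f"[The Start of Assistant B's Answer]\n{answer_b}\n"
        f"[The End of Assistant B's Answer]"
    )


def parse_verdict(judge_output: str):
    """Return 'A' or 'B' from the last [[A]]/[[B]] marker, or None if absent."""
    # Assumed convention: the judge ends its reasoning with [[A]] or [[B]].
    matches = re.findall(r"\[\[([AB])\]\]", judge_output)
    return matches[-1] if matches else None
```

Taking the last marker means a verdict stated after the reasoning overrides any marker quoted earlier in the trace.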

Key Findings

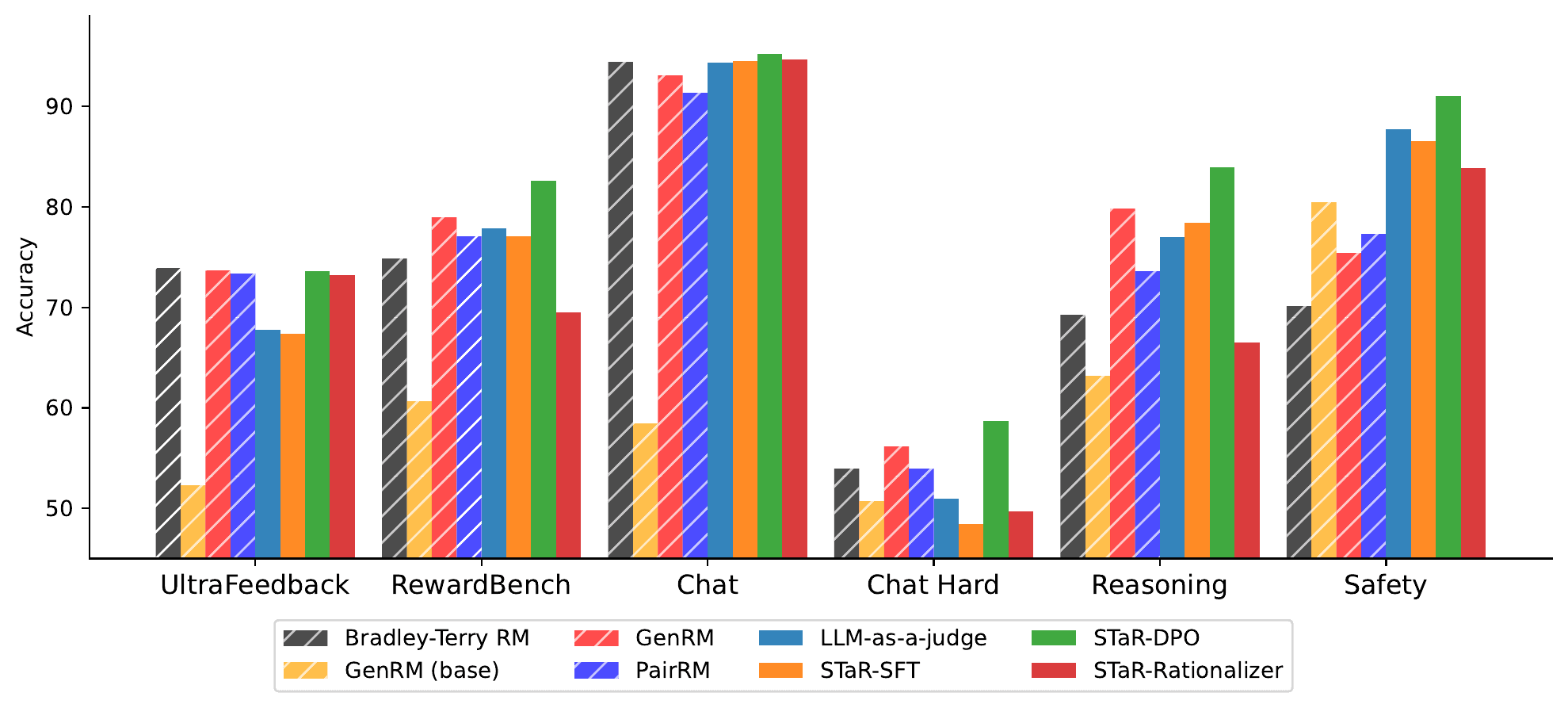

Our experiments with CoT-GenRM and STaR-DPO yielded some exciting results:

- Comparable Performance In-Domain: CoT-GenRM matches the performance of traditional reward models on in-distribution tasks.

- Better Generalization Out-of-Domain: On out-of-distribution tasks, CoT-GenRM significantly outperforms existing methods.

- Reasoning Matters: The reasoning component in CoT-GenRM significantly boosts performance, especially on complex tasks.

- Scalability: CoT-GenRM reduces the need for extensive human annotation, making it more scalable for real-world applications.

- Simplified Training: GenRMs allow for effective training without the need for a separate reward model.

Performance Comparison

UltraFeedback Subsets

Figure 1: Comparing generative reward models with prior reward modeling methods on in-domain (UltraFeedback) data and out-of-domain data (RewardBench). All generative model scores are the result of a majority vote over 32 samples.
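The majority vote mentioned in the caption can be sketched as below. This is a minimal illustration, assuming each of the 32 samples has already been reduced to a verdict string (or `None` when no verdict could be parsed). The function name is hypothetical.

```python
from collections import Counter


def majority_vote(verdicts):
    """Return the most frequent verdict, ignoring samples with no parsed verdict."""
    votes = Counter(v for v in verdicts if v is not None)
    if not votes:
        return None
    # Counter.most_common orders equal counts by first occurrence.
    return votes.most_common(1)[0][0]
```

For example, 20 votes for "A" and 12 for "B" across 32 samples yield "A" as the final judgment.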

Implications and Future Directions

The development of Chain-of-Thought Generative Reward Models and STaR-DPO opens up new possibilities for AI alignment:

- More Robust AI Systems: Create AI systems that better generalize to new situations and maintain alignment with human values.

- Efficient Scaling: Allow for more rapid iteration and refinement of AI behavior.

- Potential for Personalization: Address the challenge of aligning AI with diverse and potentially conflicting human views.

- Improved Reasoning Capabilities: Pave the way for AI systems that can continually improve their own reasoning and decision-making processes.

Future Research Directions

- Refining post-rationalization techniques to improve the quality of AI-generated reasoning.

- Investigating online optimization methods for real-time adaptation to new feedback.

- Extending CoT-GenRM and STaR-DPO to handle multimodal tasks.

- Addressing robustness in adversarial settings.

- Exploring the potential of STaR-DPO for continuous learning and adaptation in deployed AI systems.

Pushing New AI Boundaries

Exciting new possibilities with generative reward models:

- Significant performance gains, especially in out-of-distribution scenarios

- Adaptive AI that evolves with your domain's unique challenges

- Personalization at scale through pluralistic preference modeling

- Localization capabilities for global AI deployment

- New avenues for multimodal AI and advanced reasoning capabilities

Interested in exploring how GenRM could enhance your AI?